The 1cycle policy

Posted on Sat 07 April 2018 in Experiments

Here, we will dig into the first part of Leslie Smith's work on setting hyper-parameters (namely learning rate, momentum and weight decay). In particular, his 1cycle policy trains complex models very quickly. As an example, we'll see how it allows us to train a resnet-56 on cifar10 to the same or better accuracy than the authors reported in their original paper, but with far fewer iterations.

By training with high learning rates we can reach a model that gets 93% accuracy in 70 epochs, which is fewer than 7k iterations (as opposed to the 64k iterations, roughly 360 epochs, in the original paper).

This notebook contains all the experiments. They use the same data augmentation as the original paper, with one minor tweak. As in the paper, we randomly flip the picture horizontally and take a random crop after adding a padding of 4 pixels on each side. The tweak is that we don't color the padded pixels in black but use reflection padding, since it's the one implemented in the fastai library. This is probably why we get slightly better results than Leslie when running the experiments with the same hyper-parameters.

Using high learning rates

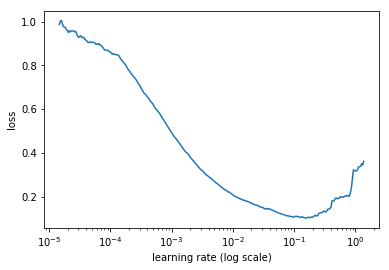

We have already seen how to implement the learning rate finder: begin to train the model while increasing the learning rate from a very low value to a very large one, and stop when the loss starts to really get out of control. Plot the losses against the learning rates and pick a value a bit before the minimum, where the loss still improves. Here for instance, anything between \(10^{-2}\) and \(3 \times 10^{-2}\) seems like a good idea.
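To make the procedure concrete, here is a minimal sketch of such a range test in plain Python. It is not the fastai implementation: `lr_find`, the `train_step` callback, the geometric spacing of the learning rates and the `stop_factor` threshold are all assumptions made for illustration, and the "model" is a toy one-parameter problem that diverges once the learning rate exceeds 0.5.

```python
def lr_find(train_step, lr_start=1e-5, lr_end=10.0, num_it=100, stop_factor=4.0):
    """Run a learning rate range test.

    `train_step(lr)` is a hypothetical callback: it must perform one
    optimizer step at learning rate `lr` and return the loss as a float.
    """
    lrs, losses = [], []
    best = float("inf")
    for i in range(num_it):
        # Geometric interpolation, so every order of magnitude gets equal time.
        lr = lr_start * (lr_end / lr_start) ** (i / (num_it - 1))
        loss = train_step(lr)
        lrs.append(lr)
        losses.append(loss)
        best = min(best, loss)
        # Stop once the loss is clearly out of control (assumed criterion).
        if loss > stop_factor * best:
            break
    return lrs, losses


# Toy stand-in for a model: minimise 2 * w**2 with plain gradient descent.
state = {"w": 1.0}

def toy_step(lr):
    w = state["w"]
    loss = 2.0 * w * w              # loss measured before the update
    state["w"] = w - lr * 4.0 * w   # the gradient of 2 * w**2 is 4 * w
    return loss

lrs, losses = lr_find(toy_step)
```

Plotting `losses` against `lrs` on a logarithmic x-axis gives the kind of curve shown above. On a real model the raw losses are noisy, so some smoothing before plotting is usually helpful.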

This was already an idea of the same author, and he completes it in his latest article with a good approach to adopt during training.

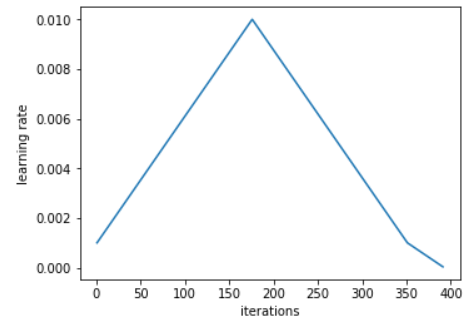

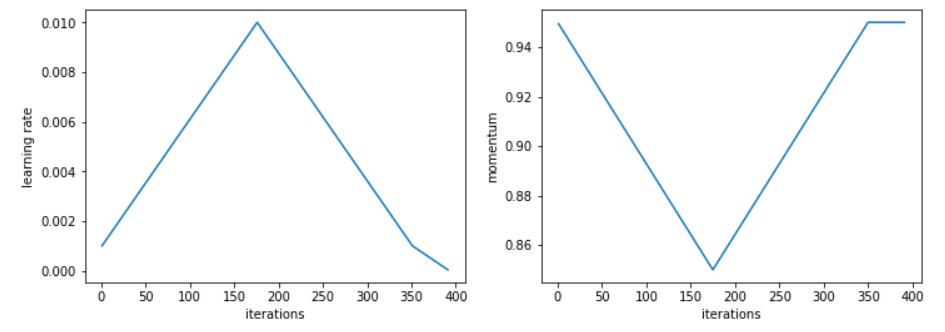

He recommends doing a cycle with two steps of equal length: one going from a lower learning rate to a higher one, then one going back to the minimum. The maximum should be the value picked with the Learning Rate Finder, and the lower one can be ten times lower. The length of this cycle should be slightly less than the total number of epochs, and in the last part of training we should allow the learning rate to decrease below the minimum, by several orders of magnitude.
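The schedule described above can be sketched as a small function. This is only an illustration: the name `one_cycle_lr` and its parameters are hypothetical, the 90% cycle length is an assumed reading of "slightly less than the total", and the linear shape of the final decrease and the factor of 100 are assumptions too.

```python
def one_cycle_lr(it, total_it, lr_max, cycle_frac=0.9, div=10.0, final_div=100.0):
    """Learning rate at iteration `it` (0-based) out of `total_it` iterations."""
    lr_min = lr_max / div                    # lower bound, ten times lower by default
    cycle_len = round(total_it * cycle_frac) # the cycle is a bit shorter than training
    half = cycle_len / 2
    if it <= half:
        # First step: linear increase from lr_min to lr_max.
        return lr_min + (lr_max - lr_min) * it / half
    if it <= cycle_len:
        # Second step: linear decrease back to lr_min, same length as the first.
        return lr_max - (lr_max - lr_min) * (it - half) / half
    # Last part: keep decreasing (linearly here) down to lr_min / final_div.
    lr_end = lr_min / final_div
    frac = (it - cycle_len) / (total_it - cycle_len)
    return lr_min + (lr_end - lr_min) * frac


# Example: a maximum of 0.8 picked with the Learning Rate Finder, 1000 iterations.
schedule = [one_cycle_lr(i, 1000, 0.8) for i in range(1001)]
```

With these defaults the learning rate starts at 0.08, peaks at 0.8 at iteration 450, is back to 0.08 at iteration 900 and ends at 0.0008.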

The idea of starting slower isn't new: using a lower value to warm up the training is often done, and this is exactly what the first part achieves. Leslie doesn't recommend switching to a higher value directly, however, but rather going there slowly and linearly, taking as much time going up as going down.

What he observed during his experiments is that during the middle of the cycle, the high learning rates act as a regularization method and keep the network from overfitting. They prevent the model from landing in a steep area of the loss function, preferring to find a minimum that is flatter. He explained in this other paper how he observed that, with this policy, approximations of the Hessian were lower, indicating that SGD was finding a wider, flatter area.

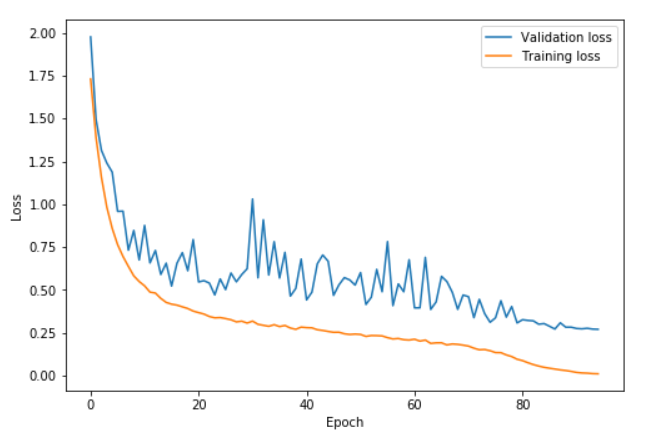

Then the last part of the training, with learning rates descending all the way to annihilation, allows us to go inside a steeper local minimum within that smoother part. During the part with high learning rates, we don't see substantial improvements in the loss or the accuracy, and the validation loss sometimes spikes very high, but we see all the benefits of doing this when we finally lower the learning rates at the end.

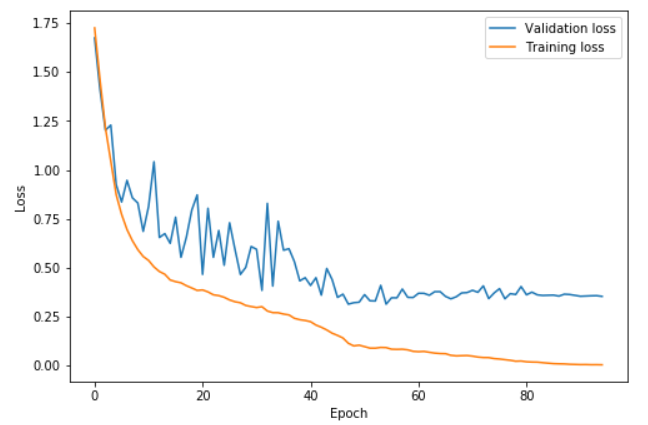

In this graph, the learning rate was rising from 0.08 to 0.8 between epochs 0 and 41, getting back to 0.08 between epochs 41 and 82 then going to one hundredth of 0.08 in the last few epochs. We can see how the validation loss gets a little bit more volatile during the high learning rate part of the cycle (epochs 20 to 60 mostly) but the important part is that on average, the distance between the training loss and the validation loss doesn't increase. We only really start to overfit at the end of the cycle, when the learning rate gets annihilated.

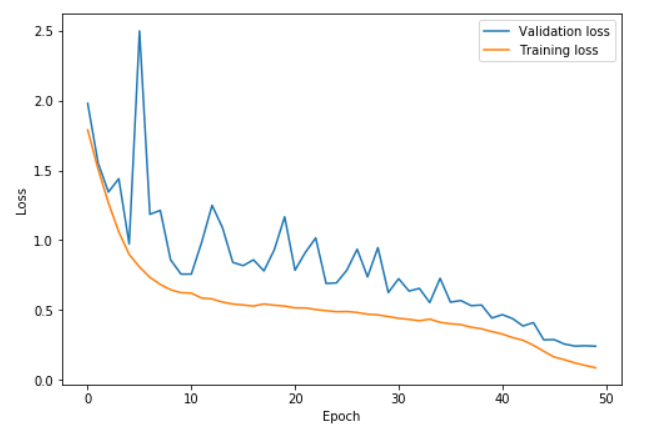

Surprisingly, applying this policy even allows us to pick larger maximum learning rates, closer to the minimum of the plot we draw when using the learning rate finder. Those trainings are a bit more dangerous, in the sense that the loss can go too far away and make the whole thing diverge. In those cases, it can be worth trying a longer cycle before going to a lower learning rate, since a long warm-up seems to help.

In this graph, the learning rate was rising from 0.15 to 3 between epochs 0 and 22.5, getting back to 0.15 between epochs 22.5 and 45, then going to one hundredth of 0.15 in the last few epochs. With very high learning rates, we get to learn faster and prevent overfitting. The difference between the validation loss and the training loss stays extremely low up until we annihilate the learning rates. This is the phenomenon Leslie Smith describes as super-convergence.

With this technique, we can train a resnet-56 to 92.3% accuracy on cifar10 in barely 50 epochs. Going to a cycle of 70 epochs gets us to 93% accuracy.

By contrast, a shorter cycle followed by a longer annihilation will result in something like this:

Here our two steps end at epoch 42 and the rest of the training is spent with a slowly decreasing learning rate. The validation loss stops decreasing, which causes more and more overfitting, and the accuracy barely improves.

Cyclical momentum

To accompany the movement toward larger learning rates, Leslie found in his experiments that decreasing the momentum led to better results. This supports the intuition that in that part of the training, we want SGD to quickly go in new directions to find a flatter area, so the new gradients need to be given more weight. In practice, he recommends picking two values like 0.85 and 0.95, decreasing from the higher one to the lower one while we increase the learning rate, then going back to the higher momentum as the learning rate goes down.
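As a rough sketch, the momentum schedule is the mirror image of the learning rate one. The function name `cyclical_momentum` is hypothetical, and keeping the momentum at its higher value once the cycle is over is an assumption, since the text above only describes what happens during the cycle.

```python
def cyclical_momentum(it, cycle_len, mom_max=0.95, mom_min=0.85):
    """Momentum at iteration `it`, for a cycle lasting `cycle_len` iterations."""
    half = cycle_len / 2
    if it <= half:
        # Learning rate goes up, so momentum goes down from mom_max to mom_min.
        return mom_max - (mom_max - mom_min) * it / half
    if it <= cycle_len:
        # Learning rate goes down, so momentum goes back up to mom_max.
        return mom_min + (mom_max - mom_min) * (it - half) / half
    # After the cycle: stay at the higher value (assumed).
    return mom_max


# Example: a cycle of 900 iterations.
moms = [cyclical_momentum(i, 900) for i in range(1000)]
```

The momentum starts at 0.95, reaches 0.85 exactly when the learning rate peaks in the middle of the cycle, and is back at 0.95 when the cycle ends.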

According to Leslie, a constant momentum set to exactly the best value can give the same final results, but using cyclical momentum removes the hassle of trying multiple values and running several full cycles, which costs precious time.

Even if using cyclical momentum always gave slightly better results, I didn't find the same gap as in the paper between using a constant momentum and cyclical ones.

All the other parameters matter

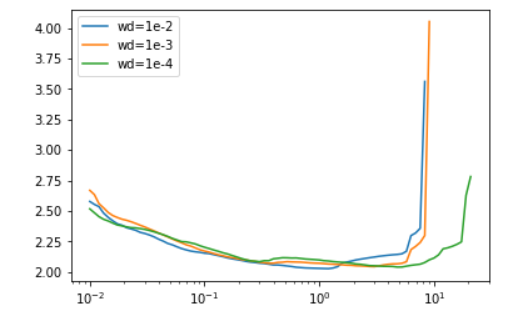

The way we tune all the other hyper-parameters of the model will impact the best learning rate. That's why when we run the Learning Rate Finder, it's very important to use it with the exact same conditions as during our training. For instance different batch sizes or weight decays will impact the results:

This can be useful to set some hyper-parameters. For instance, with weight decay, Leslie's advice is to run the learning rate finder for a few values of weight decay, and pick the largest one that will still let us train at a high maximum learning rate. This is how we can come up with the \(10^{-4}\) used in our experiments.
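That selection rule can be written down as a tiny helper. Everything in it is illustrative: `pick_weight_decay` is a hypothetical name, the `tolerance` parameter is one assumed way to decide what "still a high maximum learning rate" means, and the readings are made-up numbers, not results from the experiments.

```python
def pick_weight_decay(max_lr_by_wd, tolerance=0.5):
    """Pick the largest weight decay that still allows a high learning rate.

    `max_lr_by_wd` maps each weight decay to the maximum learning rate read
    off the learning rate finder plot for that value. A weight decay is kept
    if its learning rate is at least `tolerance` times the best one (assumed rule).
    """
    best_lr = max(max_lr_by_wd.values())
    candidates = [wd for wd, lr in max_lr_by_wd.items() if lr >= tolerance * best_lr]
    return max(candidates)


# Made-up readings, for illustration only.
readings = {1e-6: 1.0, 1e-5: 1.0, 1e-4: 0.8, 1e-3: 0.1}
chosen = pick_weight_decay(readings)
```

With these made-up readings, the largest weight decay, `1e-3`, is rejected because it forces a much lower learning rate, so the helper returns `1e-4`.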

In his opinion, the batch size should be set to the highest value that fits in the available memory. The other hyper-parameters we may have (dropout for instance) can then be tuned the same way as weight decay, or just by trying them on a cycle and seeing the results they give. The one thing to never forget is to re-run the Learning Rate Finder, especially when picking a strategy with an aggressive learning rate close to the maximum possible value.

Training with the 1cycle policy at high learning rates is a method of regularization in itself, so we shouldn't be surprised if we have to reduce the other forms of regularization we were previously using when we put it in place. It will however be more efficient, since we can train for a long time at large learning rates.